A Simple CNN Implementation (with PyTorch)

Notes from my first time implementing a CNN.

The code turns out to be fairly simple, especially with a framework like PyTorch.

### Importing the libraries

    import torch
    import torch.nn as nn
    import matplotlib.pyplot as plt
    import numpy as np
    from torchvision import datasets, transforms

### Using CUDA acceleration (tested on Google Colab, which offers GPU acceleration)

    # Fall back to the CPU when no GPU is available
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')

### Downloading the well-known MNIST dataset. Note the `transform` argument: the dataset yields PIL images, so `transforms.ToTensor()` is needed to convert them to tensors

    # Training set (60,000 images), reshuffled every epoch
    train_loader = torch.utils.data.DataLoader(
        datasets.MNIST('./data', train=True, download=True,
                       transform=transforms.Compose([
                           transforms.ToTensor()
                       ])),
        batch_size=100, shuffle=True)

    # Test set (10,000 images), read in batches of 1,000
    test_loader = torch.utils.data.DataLoader(
        datasets.MNIST('./data', train=False, download=True,
                       transform=transforms.Compose([
                           transforms.ToTensor()
                       ])),
        batch_size=1000, shuffle=True)

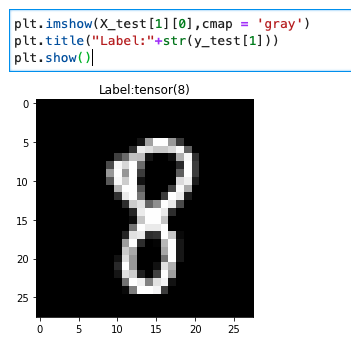

展示一下MNIST的图片及其label:
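The code that produced this figure is not included in the post. As a minimal sketch of one way to draw such a grid, the hypothetical helper `show_images` below (my own name, not from the original) plots a batch of grayscale images with their labels as titles. It runs on random stand-in data so that it works without downloading MNIST.

```python
import numpy as np
import matplotlib.pyplot as plt

def show_images(images, labels, n_cols=5):
    """Plot grayscale images of shape (N, H, W) in a grid, titled by label.

    Hypothetical helper for illustration; not part of the original post.
    """
    n = len(images)
    n_rows = (n + n_cols - 1) // n_cols  # ceiling division
    fig, axes = plt.subplots(n_rows, n_cols,
                             figsize=(2 * n_cols, 2 * n_rows), squeeze=False)
    for ax in axes.flat:
        ax.axis('off')  # hide ticks, also on unused cells
    for ax, img, label in zip(axes.flat, images, labels):
        ax.imshow(np.asarray(img), cmap='gray')
        ax.set_title(str(int(label)))
    return fig

# Random stand-in data with the MNIST image size (28x28)
demo_fig = show_images(np.random.rand(10, 28, 28), np.arange(10))
```

With the data loaders from this post, one would presumably take a batch with `X, y = next(iter(train_loader))` and call `show_images(X[:10, 0], y[:10])` followed by `plt.show()`.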

### Training the CNN

    class CNN(nn.Module):
        def __init__(self, num_classes=10):
            super().__init__()
            # Block 1: 1x28x28 -> 16x14x14
            self.layer1 = nn.Sequential(
                nn.Conv2d(1, 16, kernel_size=5, stride=1, padding=2),
                nn.BatchNorm2d(16),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2))
            # Block 2: 16x14x14 -> 32x7x7
            self.layer2 = nn.Sequential(
                nn.Conv2d(16, 32, kernel_size=5, stride=1, padding=2),
                nn.BatchNorm2d(32),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=2))
            # Linear classifier on the flattened 32x7x7 feature map
            self.fc = nn.Linear(7*7*32, num_classes)

        def forward(self, x):
            out = self.layer1(x)
            out = self.layer2(out)
            out = out.reshape(out.size(0), -1)  # flatten to (batch, 7*7*32)
            out = self.fc(out)
            return out

    model = CNN().to(device)
    loss_func = nn.CrossEntropyLoss()
    optim = torch.optim.Adam(model.parameters(), amsgrad=True)

    n_epochs = 10
    for i in range(n_epochs):
        for batch_idx, (X_train, y_train) in enumerate(train_loader):
            X_train = X_train.to(device)
            y_train = y_train.to(device)

            y_hat = model(X_train)
            loss = loss_func(y_hat, y_train)

            optim.zero_grad()
            loss.backward()
            optim.step()
        # Epoch index and the loss of the last batch in that epoch
        print('{},\t{:.2f}'.format(i, loss.item()))

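This is about the simplest CNN there is, based on the paper by O'Shea, K., & Nash, R. (n.d.), An Introduction to Convolutional Neural Networks. It has two layers. Each layer first applies a convolution, passes the resulting matrix through a ReLU, and then applies max pooling (in the code above, each convolution is also followed by batch normalization). A final linear transformation produces the class scores. (I am not yet comfortable with tuning the hyperparameters, so those are copied from the official tutorial.)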
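To see where the `7*7*32` input size of the final linear layer comes from, the spatial size can be traced through both layers. The sketch below uses the standard output-size formula for convolution and pooling without dilation; `conv_out_size` is a hypothetical helper name, not a PyTorch function.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer (no dilation)."""
    return (size + 2 * padding - kernel_size) // stride + 1

size = 28  # MNIST images are 28x28
for block in (1, 2):
    # 5x5 convolution with padding 2 and stride 1 keeps the size
    size = conv_out_size(size, kernel_size=5, stride=1, padding=2)
    # 2x2 max pooling with stride 2 halves it
    size = conv_out_size(size, kernel_size=2, stride=2)
    print(f"after block {block}: {size}x{size}")

flat_features = size * size * 32  # 32 channels leave the second block
print(flat_features)  # 1568
```

The size goes 28 → 14 → 7, so the flattened feature vector has 7 × 7 × 32 = 1568 entries.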

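Of course, the model could also be written as a single `nn.Sequential` containing all the layers, but I find that less intuitive than subclassing `nn.Module` and writing a class. Note that the `__init__` method has to call the parent class's `__init__`.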
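For comparison, here is a rough sketch of the single-`nn.Sequential` variant mentioned above. The name `seq_model` is mine, and `nn.Flatten` stands in for the `reshape` call in `forward`. The number of classes is fixed at 10, as for MNIST.

```python
import torch
import torch.nn as nn

seq_model = nn.Sequential(
    nn.Conv2d(1, 16, kernel_size=5, stride=1, padding=2),
    nn.BatchNorm2d(16),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2, stride=2),
    nn.Conv2d(16, 32, kernel_size=5, stride=1, padding=2),
    nn.BatchNorm2d(32),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2, stride=2),
    nn.Flatten(),                # replaces the reshape in forward()
    nn.Linear(7 * 7 * 32, 10),
)

# Shape check with a random batch of four 28x28 single-channel images
dummy = torch.randn(4, 1, 28, 28)
seq_out = seq_model(dummy)
print(seq_out.shape)  # torch.Size([4, 10])
```

This version is shorter, but the class version keeps the layers named (`layer1`, `layer2`, `fc`) and leaves room for custom logic in `forward`.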

### Testing the model

    correct = 0
    total = 0
    model.eval()  # make BatchNorm use its running statistics at test time
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            images = images.to(device)
            labels = labels.to(device)
            outputs = model(images)
            # Index of the largest score is the predicted class
            _, predicted = torch.max(outputs, 1)
            correct += (predicted == labels).sum().item()
            total += labels.size(0)

    print('Accuracy of the network on the %d test images: %.2f %%' % (
        total, 100.0 * correct / total))

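Accuracy is computed over the test set (10,000 images, processed in batches of 1,000). With the model I trained, the accuracy comes out at about 99%, which is in line with what one expects from a CNN on MNIST.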

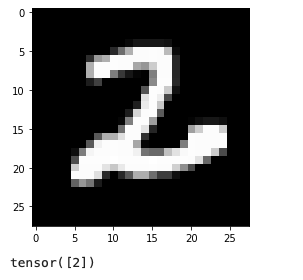

We can also pick a single image and look at the prediction:

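    # X_test is not defined earlier in the post; here it is taken from one test batch
    X_test, y_test = next(iter(test_loader))
    model.eval()  # inference mode for BatchNorm
    plt.imshow(X_test[2][0], cmap='gray')
    with torch.no_grad():
        outputs = model(X_test[2].view(-1, 1, 28, 28).to(device))
    _, predict = torch.max(outputs, 1)
    plt.show()
    print(predict)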

Result: